By Shannon Wolfman

This is the second post in a series about artificial intelligence, along with its uses and social/political implications.

If you’ve been following the news on climate change and artificial intelligence over the last few years, you might feel conflicted about the potential for AI to help us in combating global warming. For the most part, mainstream and tech publications either exalt AI as a climate savior or decry AI’s ever-increasing carbon footprint. When both issues are discussed in the same article, the focus is on whether AI is doing more harm than good for the environment.

But AI isn’t inherently carbon-intensive; it’s a tool that has tremendous capabilities for mitigating climate damage, and any technology is only as green as its power source. Considering AI’s climate costs and potential climate benefits as distinct issues and understanding the impacts they have on each other can lead to more coherent conversations about the role that this technology can and should play in our climate future.

So why does AI have such a big carbon footprint right now?

Let’s start with what artificial intelligence is: a field of computer science concerned with computer programs that can perform tasks that would typically require human intelligence. Machine learning is a subfield of AI, but as the technology is employed today, the two terms are often used interchangeably. Machine learning uses large data sets and complicated math to make predictions or classifications quickly (compared to a human). When people refer to training an AI, they’re talking about the process of finding a function that best describes a large and complex data set. From there, they can apply that very complicated function to new data to generate predictions or classifications.

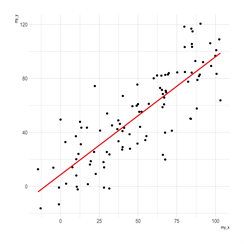

This is where energy consumption and the carbon footprint come into play. If your data has two dimensions, x and y, statistical software can fit a line to it relatively quickly (sample image from R Graph Gallery). You can do something similar with a more complex data set in three dimensions (x, y, and z), but the software, algorithms, and math are more complex (sample image from MATLAB).
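For the two-dimensional case, fitting a line usually means an ordinary least-squares fit. The minimal sketch below assumes that technique and uses plain Python with no statistics package; the helper name `fit_line` and the sample points are hypothetical and are not taken from the R Graph Gallery example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept (hypothetical helper)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired (x, y) points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How spread out x is, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical sample data lying exactly on y = 2x + 1.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
# "Applying" the trained model to a new input is just evaluating the fitted line.
prediction = slope * 5 + intercept
print(slope, intercept, prediction)
```

Training here is the fit itself; once the slope and intercept are known, every new prediction is cheap. Large models follow the same pattern, just with vastly more parameters to find.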

Now imagine that instead of 2 or 3 dimensions, you have thousands of dimensions, or millions, or even billions. Finding an equation to fit data with that many dimensions takes a lot of math and a lot of computing power, which means a lot of energy consumption. So as a model gets more complex, it can become more accurate (with diminishing returns), but it also uses much more energy.
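To make "a lot of math" a little more concrete, here is a rough operation count for one specific, simple method: ordinary least squares solved through the normal equations. That method is an assumption chosen for illustration. Large modern models are trained iteratively with gradient descent rather than with this closed-form solve, so the numbers show how cost grows with the number of dimensions, not what any real model costs. The function name and data size are hypothetical.

```python
def least_squares_ops(n_samples: int, n_dims: int) -> float:
    """Rough multiply-add count for ordinary least squares via the normal equations.

    Forming the d-by-d matrix X^T X from n samples costs about n * d**2 operations;
    solving the resulting d-by-d system with a Cholesky factorization costs about d**3 / 3.
    Lower-order terms (such as forming X^T y) are ignored.
    """
    form_gram_matrix = n_samples * n_dims ** 2
    solve_system = n_dims ** 3 / 3
    return form_gram_matrix + solve_system


n = 1_000_000  # hypothetical number of data points
for d in (2, 3, 1_000, 100_000):
    print(f"{d:>7,} dimensions: ~{least_squares_ops(n, d):.2e} operations")
```

With a million data points, going from 2 dimensions to 1,000 multiplies the work by roughly 250,000, because the dominant term grows with the square of the number of dimensions.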

Training the largest AI models can have a carbon footprint equivalent to the lifetime carbon footprint of five cars, and the trend in the field has been to build bigger and bigger AIs. But the reason big AIs have such a huge carbon footprint isn’t that they use a lot of energy; it’s that they, like most of us globally, get their energy from burning fossil fuels. Running highly complex AIs can be extremely carbon-intensive, but it doesn’t have to be. More on that in the next section.

We should also consider that most machine learning models are quite small and can run in seconds to hours on personal computers, so there’s a ton of variability in the energy requirements of different AIs. A computer science PhD student whom I interviewed confirmed this, explaining that the carbon impact of most AI in use today is better compared to a day spent playing video games than to the lifetime emissions of a car. So we shouldn’t think of AI as one thing that emits a set amount of carbon; we should distinguish between AIs at least in part based on their energy consumption and how that energy is produced.
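The two factors in that last sentence, how much energy an AI uses and how that energy is produced, combine as a simple multiplication: emissions equal energy consumed times the carbon intensity of the grid supplying it. The sketch below assumes that simple model and ignores cooling overhead and the emissions from manufacturing hardware. The function name, wattage, run time, and grid intensities are hypothetical round numbers for illustration, not measurements.

```python
def training_emissions_kg(power_watts: float, hours: float, grid_kg_co2_per_kwh: float) -> float:
    """Estimated CO2 in kg for a run drawing `power_watts` for `hours`.

    Simple model: emissions = energy (kWh) * grid carbon intensity (kg CO2 per kWh).
    Ignores cooling overhead and emissions from manufacturing the hardware.
    """
    energy_kwh = power_watts * hours / 1000
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical: a 300 W machine training a small model for 10 hours (3 kWh).
# Both grid intensities are illustrative placeholders, not real grid data.
fossil_heavy = training_emissions_kg(300, 10, 0.8)
low_carbon = training_emissions_kg(300, 10, 0.02)
print(f"fossil-heavy grid: {fossil_heavy:.2f} kg CO2")
print(f"low-carbon grid:   {low_carbon:.2f} kg CO2")
```

The model and the energy are identical in both runs; the 40-fold difference in emissions comes entirely from the power source.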

We can also consider what AIs are used for when discussing their emissions. For example, if a fossil fuel company uses a small AI running on green electricity to find oil deposits more efficiently, the climate impact of that AI isn’t limited to the energy it uses during training and deployment. Similarly, a medium-energy AI used repeatedly for a climate-neutral purpose, like the ones Netflix uses to figure out which show you should watch next, will have a different net climate impact than the same AI used toward a climate goal, even if the energy comes from the same source.

How can we make AI more sustainable?

The best way to make AI climate neutral would be to make all energy production carbon neutral. Fully transitioning to renewable energy sources or nuclear power would make all energy use, including the energy used by massively complex AIs, carbon neutral. But this solution will likely take a lot of time, dedicated political will, global cooperation, and technological advancement. So in the meantime, we should implement strategies to reduce the carbon cost of energy production in general and for AI use in particular.

For example, improving or further incentivizing green energy grids would make AI and all other electricity use more sustainable. A carbon tax would also encourage prioritizing low emissions in all sectors, including the training and deployment of AI, but the tax would need to be set quite high to be effective. Nearly any policy that advances green energy would also make AI greener. However, there are also technological and regulatory actions that apply more specifically to AI. For example, there is a big push in the field to develop more efficient hardware for computing in general and for AI specifically. Advances in this area could reduce the time and energy costs of AI, so researchers and corporations alike are pursuing them vigorously. Some states have even enacted their own energy efficiency regulations for computers. While better hardware could reduce energy use overall, that isn’t guaranteed: more efficient computing could just mean that more computing happens, using the same or even more energy. As long as electricity is cheap and not generated renewably, changes to hardware won’t necessarily reduce carbon emissions.
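The worry that efficient hardware may not cut energy use is an example of a rebound effect (sometimes called the Jevons paradox), and the arithmetic behind it is simple: total energy equals the amount of computation divided by how much computation each unit of energy buys. The sketch below uses made-up multipliers purely for illustration; the function name is hypothetical.

```python
def energy_after_upgrade(baseline_energy: float, efficiency_gain: float, demand_growth: float) -> float:
    """Energy use after hardware becomes `efficiency_gain` times more efficient
    while the total amount of computation grows by a factor of `demand_growth`."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return baseline_energy * demand_growth / efficiency_gain


# Hypothetical: hardware becomes twice as efficient (baseline = 100 energy units).
same_demand = energy_after_upgrade(100.0, 2.0, 1.0)  # computation unchanged
more_demand = energy_after_upgrade(100.0, 2.0, 3.0)  # computation triples
print(same_demand, more_demand)
```

Halving the energy per computation cuts total energy in half only if demand stays flat; if computation triples, total energy rises by half, and on a fossil-fuel grid so do emissions.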

Increasing the adoption of cloud computing, which uses the internet to run computer programs on hardware in data centers located somewhere else, can also reduce the energy costs of computing, including AI. Data centers are big buildings full of servers, data storage, and other computing equipment, and you can use data centers that are hooked up to green energy grids even if those grids aren’t in the same physical location as the person using the AI. However, without sustainable data centers, cloud computing can’t be sustainable. Therefore, regulating data centers for energy efficiency and sustainability would reduce the carbon footprint of AI and all other computing, and may be the most promising avenue for making AI more sustainable in the near term. The Energy Act of 2020 took some first steps here, empowering the Department of Energy and the Environmental Protection Agency to develop guidelines and best practices. This entire post could be about all of the factors to consider in setting specific energy regulations for data centers, but overall, this area is ripe for federal action, and companies are preparing for it.

Putting AI to use in solving climate challenges is another way to make AI more sustainable. As discussed earlier, a carbon-neutral AI that’s used to find more oil deposits so that we can drill, process, and burn the oil for energy is not actually carbon neutral. Conversely, if we use AI to reduce emissions, the carbon benefits would likely outweigh the carbon costs.

How is AI used to fight climate change?

Using AI to combat climate change is still pretty new, and research is ongoing into how AI can address a whole host of problems, including predicting extreme weather events, developing next-generation green energy, reducing fossil fuel impacts, and optimizing the systems we already have. To reduce fossil fuel impacts, researchers are developing AIs for detecting and predicting methane leaks from natural gas pipelines, with one group even collecting its own data set by inducing controlled methane releases at a testing facility that mimics real-life gas leaks.

To optimize existing systems, researchers are using AI to maximize the power generated from renewables. Solar farms can already adjust panel positions so they face the sun all day, but there are many other factors that affect how much energy can be harvested from the sun, like shade from other panels or from trees and the power required to move the panels themselves. Machine learning can optimize the positions of solar panels throughout the day, taking all of the identified factors into account, which increases the amount of power generated by the existing fleet of solar panels.

While AI use in mitigating climate change is still in its infancy, it’s already being used for things like making energy grids more efficient and optimizing the integration of renewable energy sources into existing power grids. Smart thermostats can adjust to user preferences over time to reduce energy consumption. AIs have also been used to reduce the carbon costs of freight shipping and to make climate models more efficient and accurate so they can provide us with better predictions. So while we don’t yet know AI’s full potential for combating climate change, we’re already seeing applications where it has been useful, and ways it will likely be useful in the near term for all sorts of climate issues.

Now that we’ve touched on real and potential uses for AI in mitigating climate damage, we can weigh AI’s energy consumption against its potential green impacts. So how much energy do climate applications of AI use? As discussed already, there’s a lot of variability in energy use among different AIs, but information here is scarce: researchers using these tools often don’t report the energy used to develop or deploy their AIs, and private companies using AI don’t report it either. However, we do know that the biggest, most energy-intensive AIs are not currently being used to address climate change (they mostly focus on language). This suggests that, at least for now, there’s no strong evidence that climate applications of AI will have a net negative effect on sustainable development, even though much of the world’s electricity still comes from fossil fuels.

To summarize, the biggest carbon emissions concerns with AI today have very little to do with how AI can be used to mitigate climate change. Distinguishing these two issues can help us envision better policies for sustainable AI use, both broadly and in climate applications specifically. As AI use toward sustainability goals advances, carbon emissions from AI itself may well become a factor in deciding whether a particular AI should be used to address a particular climate issue. We should prepare for, and try to avoid, that possibility: at a minimum by regulating data centers for sustainability, and ideally by transitioning fully to green energy.